In many companies, AI and machine learning systems are built by scientists and engineers, rather than being designed by designers. As machine learning technology has become more available, it’s important that researchers and designers understand these tools so they can make great user experiences.

Although the technologies and tools are becoming more accessible, you still need to be able to code, so it’s best to have mixed teams where researchers, designers and developers work together.

That’s how we work here at Portable, where our human-centred approach to designing AI begins with understanding human needs in order to solve the right problems.

We want to solve real pain points for people, of course. At Portable we tackle very big, very complex problems on a daily basis. But to trial new techniques and technologies, internal projects are a great place to start. Later in this article you'll be walked through an example from one of our internal AI projects to demonstrate the steps we take in our approach.

Addressing a real human need

Many AI systems today come out of trawling through large amounts of data, analysing trends, finding outliers and building really complex models. This tends to result in really accurate models, but they’re not always that useful. Instead, by using a human-centred approach that takes the time to understand how users really interact with our products and services, we can identify user needs and solve them.

Failing to put the user at the centre risks you ending up with tech for tech’s sake. As Google’s Josh Lovejoy wrote in the fantastic article ‘The UX of AI’, 'If you aren’t aligned with a human need, you’re just going to build a very powerful system to address a very small — or perhaps nonexistent — problem.'

For researchers and designers, identifying user needs for an AI system is no different from doing so for any other system. It uses tools such as interviews and observation, collaborative workshops and co-design sessions, and mapping out user journeys. Once we understand the users’ goals, we can search for the really challenging, mentally taxing, resource-heavy kinds of problems. These are the ideal problems to solve with AI, and they can really improve the user experience of our systems.

Guiding the intelligence

The second part of a human-centred design of AI is guiding the intelligence.

We’re trying to solve a problem the same way that humans do, just using machines. We can use our understanding of how humans solve the problem and break it into smaller chunks.

It’s like teaching a child to read. We don’t just give them a book. We start by learning the alphabet, teaching them how to sound out syllables and familiar words, and then move onto complex words, then sentences, before they can actually read the book. We do the same thing with AI. We start with the basics and build layers and layers until we solve the problem as a whole.

In our human-centred design approach, we as design researchers ask users to solve the problem. We get them to explain the steps they’re performing in detail, and along the way we try to find the information they rely on to solve these issues.

These will be important facts we’ll need to know later on as we’re building the data and training the model.

Trust is a core principle

The final piece of the puzzle is trust: a key ingredient in usable design. Building empathy with users enables this.

Through our design process, we’re asking users to solve problems and provide information. As user-centred designers, we’ll understand how users feel when they interact with our system. Knowing this will allow us to build the trust we need for them to be comfortable sharing the information the model will need later on.

Further, as designers, knowing what went into the AI allows us to have trust in the results that come out of it.

Trust is key in all digital systems, but never more so than where very sensitive, personal information is concerned. Portable’s ongoing work in online dispute resolution (ODR) systems leverages pre-existing procedural and case outcome data. Many of the dispute processes follow pre-defined processes and hence are ripe for machine learning. It’s one of the really exciting areas where human-centred design can not only reduce costs to taxpayers but improve justice for citizens.

An AI example: Timesheets

At Portable we often use our internal tasks as ways to refine our process for clients. This way we’ve found a juicy problem for AI to solve: timesheets. Nobody likes timesheets. It’s admin that takes up a chunk of your day when you could be working on something else. How might we make the process of entering timesheets better?

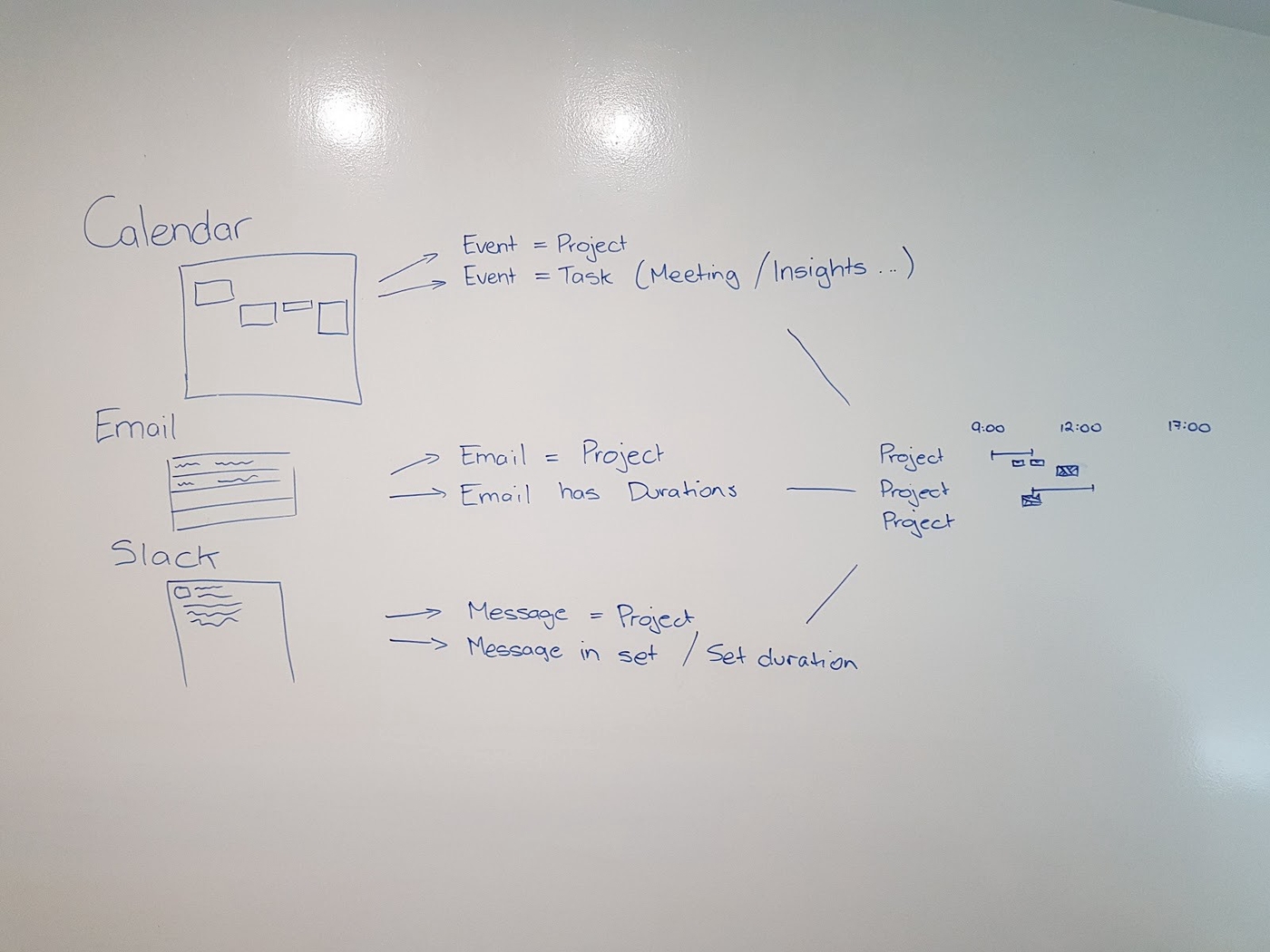

It can be a challenging task to go back through your day or week and document exactly what you were doing. But most of the systems we use throughout the day (email, calendar, Slack, Jira, Google Docs) record most of the events required to paint a fairly accurate picture of how you spent your time. We have the data.

This is a good example of how technology will progressively change how we do our jobs, if not what jobs we do. Think computers that can grade essays with the accuracy of a professor but in a fraction of the time. Or machines capable of diagnosing illness with precision. Automation is redefining the future of work and the role of the worker within it.

Timesheets might not be as important as diagnosing cancer, but they are a real pain point. And that makes them a good place to experiment.

By understanding how people solve this problem, we can break it down. In this example, it has been broken down into half a dozen steps (though there are probably more). For example, a meeting logged in the calendar can have enough information for AI to figure out what the meeting was about before we enter it into our timesheet.

Solving one problem at a time is going to simplify the AI. It’s also going to give us a lot more visibility later, when we stack the layers together. Once we’ve solved all the chunks, we can join them together to solve the problem as a whole.

So now we have a problem to solve, we know how humans solve it, and we understand how to build that trust, let’s build some AI. We’ll do this in three steps.

1. Build the data set

In our first experiment, we’re not going to need a lot of data. A spreadsheet is a great tool to start with. Generally we’re looking at a hundred to a thousand records as a good starting point. Not too many as you may have to label every individual record manually.

There are two key terms to know:

- Feature: the data going into the model. In our case, it’s a column in a spreadsheet.

- Label: the information we want to get out of the model. It’s the answer.

I had built a tool in a previous project to extract the calendar data and put it into a spreadsheet. Our features (the columns) are the event’s description, start time, end time and attendees. Note that just because we have all this information doesn’t mean we have to use every feature in the model. In fact, we want to start with the most basic set of information we can and see how the model works.

(By the way: spreadsheets aren’t going to be particularly useful at scale, but when you’re doing early experiments and prototypes and figuring out how to build your model it’s helpful to be able to plug in spreadsheet after spreadsheet and get a list of predictions and see it all clearly. In the real world, we’d build a web API to allow us to integrate the AI model with any web or mobile app.)

Now we have our features, we’ll need to provide the answers. There are many ways AI is used. When we build AI using data with both features and labels, this is called 'supervised learning'. The two most common problems solved with supervised learning are regression and classification:

- Regression refers to predicting a value, such as the price of something, the length of time something will take, or any other numerical value.

- Classification is a way of predicting from a list of two or more items. A classic classifier for computer vision is AI that recognises objects in images. In our case, we’re going to be predicting the name of a project from a list of projects.

To do this, I’ve added an extra column to our spreadsheet called 'project', gone through line by line and labelled each row with the name of one of our projects. That’s it. We’ve got our first data set.
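As a rough illustration of what that labelled spreadsheet might look like in code, here is a minimal Python sketch. The column names, the sample rows and the project names are all invented for the example; they are not taken from the real Portable calendar extract. It separates the feature columns from the label column, starting with only the description as a feature.

```python
import csv
import io

# Hypothetical calendar export: every row and project name here is invented.
CALENDAR_CSV = """description,start_time,end_time,attendees,project
Acme sprint planning,09:00,10:00,alex;sam,Acme Website
ODR design review,11:00,12:00,sam;jo,ODR Platform
Acme client check-in,14:00,14:30,alex,Acme Website
"""

FEATURE_COLUMNS = ["description"]  # start with the most basic set of features
LABEL_COLUMN = "project"           # the answer we want the model to predict


def load_dataset(csv_text, feature_columns, label_column):
    """Split each spreadsheet row into a dict of features and a label."""
    features, labels = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        features.append({name: row[name] for name in feature_columns})
        labels.append(row[label_column])
    return features, labels


features, labels = load_dataset(CALENDAR_CSV, FEATURE_COLUMNS, LABEL_COLUMN)
for feature_row, label in zip(features, labels):
    print(feature_row, "->", label)
```

Adding another column name to `FEATURE_COLUMNS` is all it takes to try a richer feature set later.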

2. Train the model

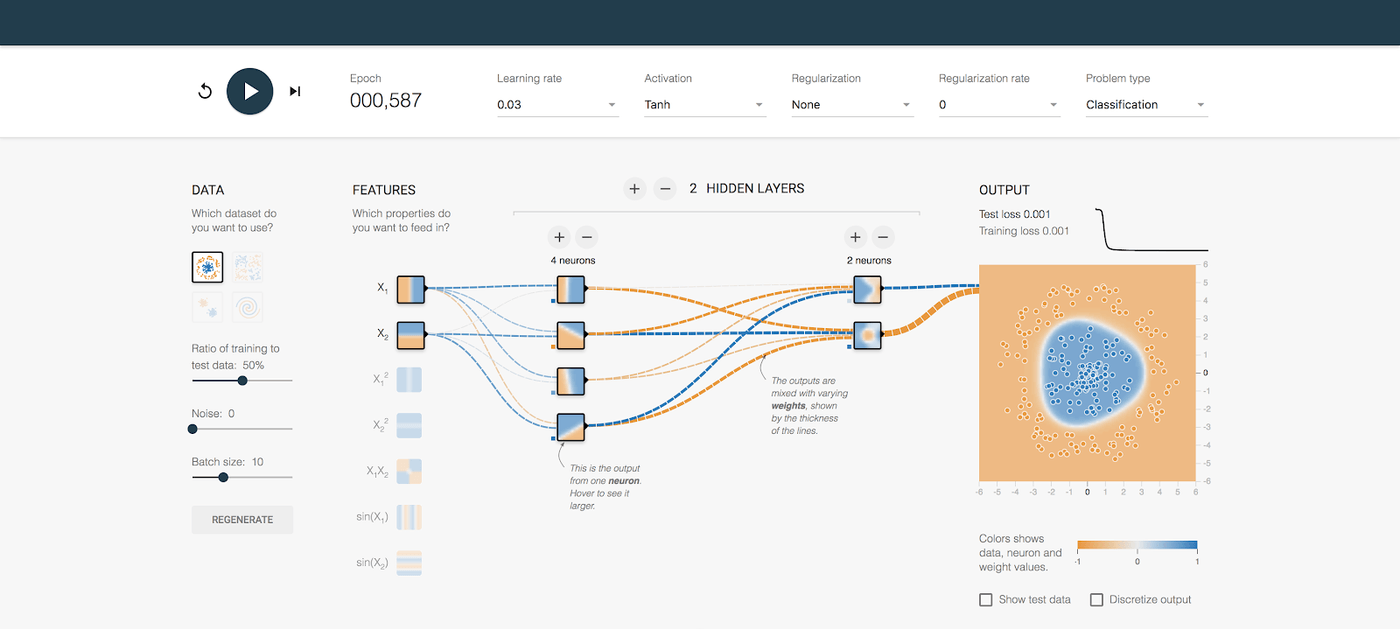

Training is the core of AI, and it’s best demonstrated with a visual example. TensorFlow Playground is a tool Google has made to demonstrate what a neural network is.

This is a neural network classifier. We used this same technology to do the predictions on the data. There are neurons in the middle, loosely similar to the way the human brain works. Each has a series of values. 'Training' means that the model makes fine adjustments to the values in each of those neurons. To check its progress, the data is split into two parts: training and evaluation. The model makes adjustments on the training data and then evaluates its own performance on the evaluation set.
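The split into training and evaluation data can be sketched in a few lines of Python. This is only an illustration of the idea, not the tooling we used: the function names, the 20 per cent evaluation fraction and the placeholder records are assumptions, and no neural network is trained here.

```python
import random


def split_dataset(records, eval_fraction=0.2, seed=0):
    """Shuffle the records and hold back a fraction for evaluation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    eval_size = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[eval_size:], shuffled[:eval_size]


def accuracy(predictions, labels):
    """Fraction of predictions that match the known labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Placeholder (description, project) records, invented for the example.
records = [(f"event {i}", "Project A" if i % 2 else "Project B") for i in range(10)]
train, evaluation = split_dataset(records)
print(len(train), "training records,", len(evaluation), "evaluation records")
```

The model only ever adjusts itself on `train`; `accuracy` is then measured on `evaluation`, which it has not seen.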

For a very simple model that only has two inputs and doesn’t have too much detail, it might only take a couple of minutes to run. I ran my sample for about 10 minutes and it got to about 60 per cent accuracy. For much larger models with millions of records and thousands of features, it can take hours, days and even weeks to run.

Once we’re happy with the amount of training the model has done, and the accuracy it’s producing, we can start to use it to make predictions on new data. So I took another extract of my calendar, ran predictions on it, and got about 60 per cent accuracy from a very basic model that only looked at one feature.
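To make the idea of predicting from a single feature concrete, here is a deliberately simple stand-in written in Python. It is a word-overlap baseline, not the neural network described above, and the training examples and project names are invented. It learns which words appear under each project and predicts the project whose words best match a new event description.

```python
from collections import Counter, defaultdict


def train_word_counts(examples):
    """Count how often each word appears under each project label."""
    counts = defaultdict(Counter)
    for description, project in examples:
        counts[project].update(description.lower().split())
    return counts


def predict_project(counts, description):
    """Pick the project whose training words overlap most with the description."""
    words = description.lower().split()
    scores = {
        project: sum(word_counts[word] for word in words)
        for project, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


# Hypothetical labelled calendar events (description, project).
training = [
    ("Acme sprint planning", "Acme Website"),
    ("Acme client check-in", "Acme Website"),
    ("ODR design review", "ODR Platform"),
    ("ODR user testing", "ODR Platform"),
]
model = train_word_counts(training)
print(predict_project(model, "ODR sprint review"))
```

A baseline like this is also a useful yardstick: if a more complex model cannot beat it, the extra complexity is not yet paying for itself.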

3. Make it smarter

We can go about improving our model in several different ways. A model is never 'finished'; we’ll continue to improve and experiment. Two significant ways to improve are by getting more data, and through 'feature engineering'. Feature engineering refers to how we actually put the data in, understanding how users work with it and how we can help the computer understand it better. A common example of feature engineering is telling an AI model that two pieces of information belong together. Longitude and latitude, for instance, mean far less when looked at independently than when they’re combined.
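One common way to combine latitude and longitude is to bucket them into a single grid-cell feature. The Python sketch below assumes a one-degree grid and a hypothetical `grid_cell` helper; it is meant to show the idea, not a recommended cell size.

```python
import math


def grid_cell(latitude, longitude, cell_size_degrees=1.0):
    """Combine latitude and longitude into one grid-cell feature."""
    lat_bucket = math.floor(latitude / cell_size_degrees)
    lon_bucket = math.floor(longitude / cell_size_degrees)
    return f"{lat_bucket}_{lon_bucket}"


# Two nearby points fall in the same cell; a distant one gets a different cell.
print(grid_cell(-37.81, 144.96))
print(grid_cell(-37.20, 144.10))
print(grid_cell(-33.87, 151.21))
```

The model now sees one feature that means 'roughly this place', rather than two numbers it has to learn to relate on its own.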

From a designer’s perspective, feature engineering is about understanding the data and how people interact with it so that the developers and anyone working with the model can put the data in the best format.

In this example, we had calendar items. Each one was entered by a different person, and each person’s names and descriptions of events differ slightly. Some people use the project code, some people use abbreviations, and some people use the full name of the project. This is a real-life example of feature engineering: understanding the data and merging all those variations into a single, consistent value.
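A simple version of that merging step can be sketched in Python as an alias lookup. The project names, codes and abbreviations below are invented for illustration, and `canonical_project` is a hypothetical helper rather than part of our actual tooling.

```python
import re

# Hypothetical aliases: full names, abbreviations and project codes.
PROJECT_ALIASES = {
    "Acme Website": ["acme website", "acme", "acm-01"],
    "ODR Platform": ["online dispute resolution", "odr", "odr-02"],
}


def canonical_project(description):
    """Return the canonical project mentioned in a description, or None."""
    text = description.lower()
    for project, aliases in PROJECT_ALIASES.items():
        for alias in aliases:
            # Word boundaries stop 'odr' from matching inside another word.
            if re.search(r"\b" + re.escape(alias) + r"\b", text):
                return project
    return None


for event in ["ACM-01 standup", "Online dispute resolution workshop", "Lunch"]:
    print(event, "->", canonical_project(event))
```

The result can be fed to the model as an extra feature, so that 'ACM-01', 'Acme' and 'Acme Website' all look the same to it.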

Like any design process, the way we improve is through experimenting and testing those experiments with multiple prototypes. We look at each one, where it failed, and why, and incorporate those learnings, along with knowledge of how the user works, into iterations and continually improve.
